USING NATURAL LANGUAGE PROCESSING TO UNDERSTAND DRAKE’S LYRICS

Article by Brandon Punturo

// Introduction:

Every couple of years there is an artist who seems to take the world by storm. In the past, this has been The Beatles and Michael Jackson, among others. These artists have the intrinsic ability to influence millions with their creative genius. It seems that when we started the second decade of the 21st century, a multitude of artists were jockeying to be number one. However, perhaps unexpectedly, a Toronto native by the name of Aubrey Graham ascended to the top under the stage name “Drake.”

Drake’s original claim to fame was his role on the popular teen drama “Degrassi: The Next Generation” in the early 2000s. However, Drake left the show when he decided he wanted to become a rapper. Lil Wayne, one of the most influential rappers at that time, made the Toronto native his protégé. After signing with Wayne’s record label, Young Money Entertainment, Drake released his first studio album, Thank Me Later. It was certified Platinum and accelerated Drake’s ascent to the top of the hip hop world. Over the course of the next eight years he dropped four additional studio albums, a mixtape, and a playlist, with Scorpion being his most recent release (source).

We know for a fact that Drake’s work is popular, but why are the majority of his songs such hits? Is it the production? Is it the marketing? It is probably a combination of factors. However, the aspect I will be focusing on is his lyrics. Drake’s work is expansive and well-documented, so getting text data was not a difficult task. Figuring out how to analyze it was. Thanks to recent improvements in NLP (Natural Language Processing), analyzing text data is now easier than ever.

According to Wikipedia, Natural Language Processing (NLP) “is an area of computer science and artificial intelligence concerned with the interactions between computers and human (natural) languages, in particular how to program computers to process and analyze large amounts of natural language data.” NLP is the most interesting field of machine learning in my opinion. Text is produced in so many different forms that it gives us a huge amount of data to work with.

In the past 5–10 years, NLP has seen rapid development, coinciding directly with the rise of deep learning. Neural nets have become a common framework for a myriad of NLP algorithms. Today, there is a diverse set of tools available, so practitioners can solve a wide range of NLP problems. These tools have allowed me to examine Drake’s lyrics.

Sourcing the Lyrics:

Before jumping into the actual analysis, I had to get my hands on Drake’s lyrics. Although there are several online lyric resources, I decided to use Genius.com. For those who are unaware, Genius is a website that annotates song lyrics. Genius has a wonderful API that is quite easy to use.

// Part I

Which Drake Song has the most unique words?

One of the things that Drake often gets criticized for is his creativity, or lack thereof. In the past he has been accused of stealing other rappers’ flows and of using ghostwriters. I set out to see if his critics’ complaints were warranted.

The inspiration to use number of unique words per song was taken from this beautiful article that visualized the largest vocabularies in rap. In my opinion, total words is a subpar measure of creativity due to the repetitiveness of today’s artists.

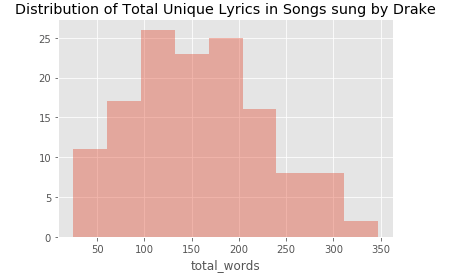

Once I finished cleaning the text data, I began counting the number of unique words in every song. Below is a histogram of the distribution of unique words across all of Drake’s songs. It seems that the majority of his songs have between 100 and 200 unique words. Without a reference distribution from other artists’ songs, this histogram does not tell me much about Drake’s creativity.

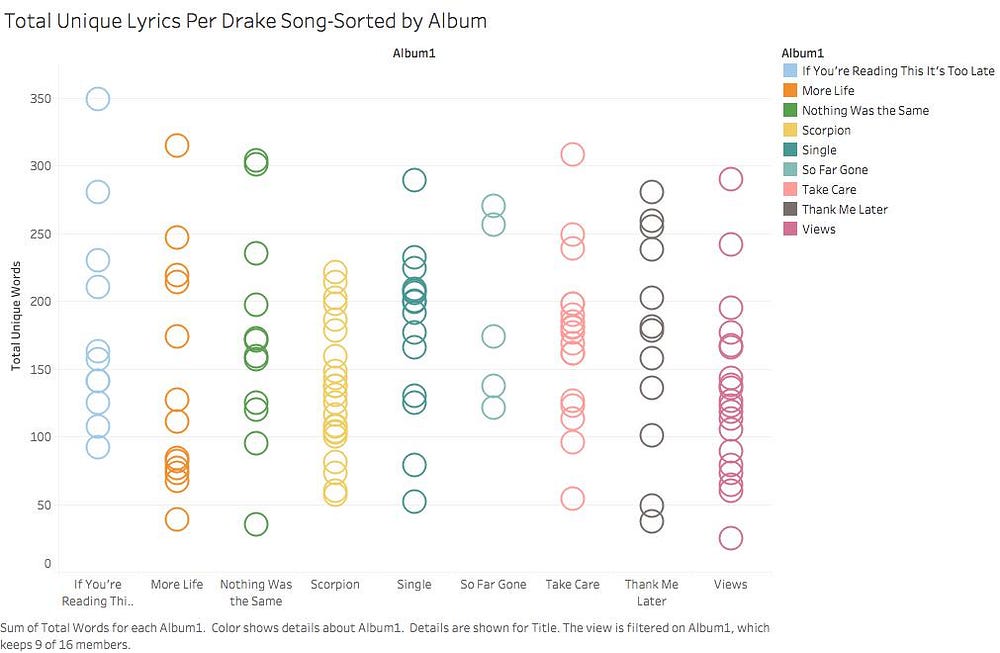
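The unique-word count described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the tokenization rule (lowercase, keep apostrophes inside words) and the helper names `unique_words`, `unique_word_counts`, and `rank_by_unique_words` are hypothetical, and the song texts are placeholders rather than real lyrics.

```python
import re


def unique_words(lyrics):
    """Return the set of distinct lowercase words in one song's lyrics."""
    # Keep apostrophes inside words so contractions such as "don't" stay whole.
    return set(re.findall(r"[a-z0-9]+(?:'[a-z0-9]+)*", lyrics.lower()))


def unique_word_counts(songs):
    """Map each song title to its number of unique words."""
    return {title: len(unique_words(text)) for title, text in songs.items()}


def rank_by_unique_words(songs, top_n=10):
    """Return (title, count) pairs sorted from most to fewest unique words."""
    counts = unique_word_counts(songs)
    return sorted(counts.items(), key=lambda item: item[1], reverse=True)[:top_n]


# Placeholder text, not real lyrics.
songs = {
    "Song A": "la la la hello hello world",
    "Song B": "one two three four five five",
}

print(rank_by_unique_words(songs))
```

Counting distinct tokens rather than total tokens is what keeps a heavily repeated hook from inflating a song's score.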

A better way to plot my findings was to look at his creativity by album. The plot, pictured below, was created in Tableau. On the x-axis is the name of the work. The y-axis represents the number of unique words. Each bubble represents a song. No album seems to be substantially more creative than the others (in terms of unique words). However, it seems that every work except Scorpion has at least one outlier in terms of number of unique words. It is fascinating to see that the songs on Scorpion, his most recent release, show little variation in the number of unique words despite it being such a massive album (25 songs).

Now, to answer the question, which song has the most unique lyrics? The answer seems to be 6PM in New York. The rest of the top 10 is below.

// Part II

Named Entity Recognition

Named Entity Recognition is “a subtask of information extraction that seeks to locate and classify named entities in text into pre-defined categories such as the names of persons, organizations, locations, expressions of times, quantities, monetary values, percentages, etc.” (Wikipedia). NER is a particularly tricky task. The complexity of the English language makes it very difficult to create an NER algorithm that is accurate for all sources of text. An algorithm may perform very well on one corpus of text (the set of Drake songs in our case) and then perform poorly on another. This inconsistency makes it necessary to try out several NER algorithms. As you will see, the algorithms are not very accurate.

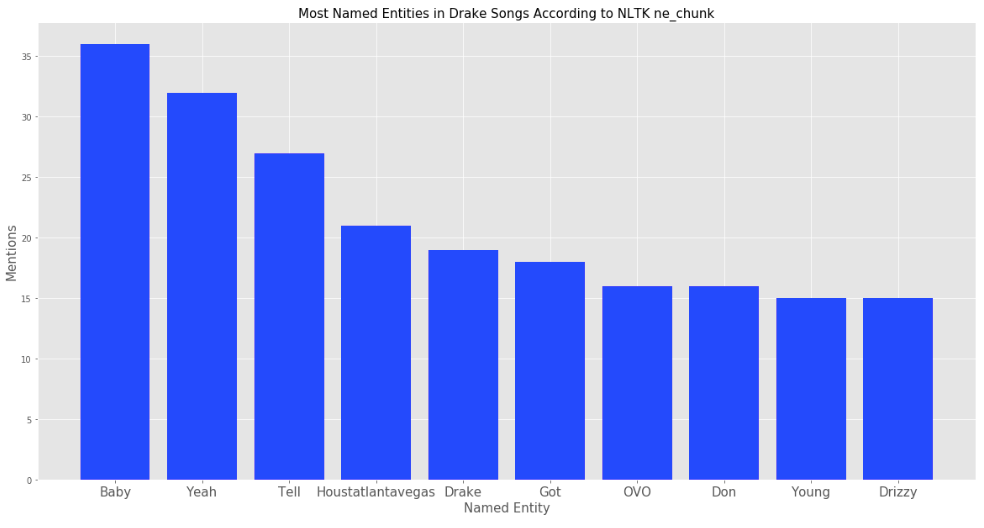

The first one I implemented was the named entity algorithm provided by NLTK. “ne_chunk” takes a list of words that have already been assigned part-of-speech (POS) tags and uses it to infer which words are named entities. As you can see from the results I found, NLTK’s algorithm does not do a very good job on its own.

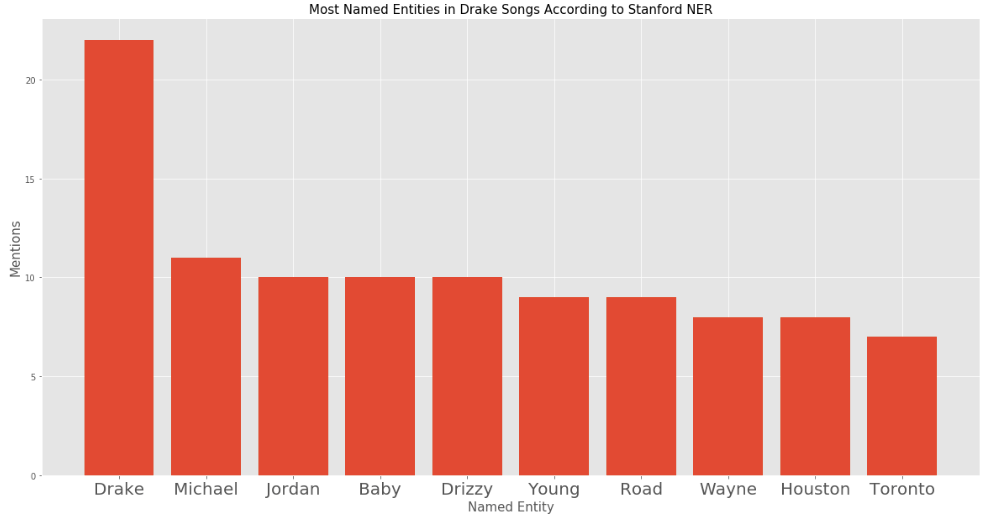
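To make the idea of inferring entities from POS-tagged words more concrete, here is a toy sketch. It is not NLTK's algorithm (NLTK's chunker is a trained classifier); it is a deliberately simple heuristic that groups consecutive proper-noun tags into candidate entities. The function name `group_proper_nouns` and the hand-tagged sentence are assumptions made for illustration.

```python
def group_proper_nouns(tagged_tokens):
    """Group runs of consecutive proper-noun tokens into candidate entities."""
    entities, current = [], []
    for word, tag in tagged_tokens:
        if tag in ("NNP", "NNPS"):  # Penn Treebank proper-noun tags
            current.append(word)
        elif current:
            # A non-proper-noun token ends the current run.
            entities.append(" ".join(current))
            current = []
    if current:
        entities.append(" ".join(current))
    return entities


# Hand-tagged example sentence (Penn Treebank tags), not real lyrics.
tagged = [
    ("Drake", "NNP"), ("grew", "VBD"), ("up", "RP"), ("in", "IN"),
    ("Toronto", "NNP"), ("and", "CC"), ("signed", "VBD"), ("with", "IN"),
    ("Young", "NNP"), ("Money", "NNP"), ("Entertainment", "NNP"), (".", "."),
]

print(group_proper_nouns(tagged))
```

A heuristic like this finds candidate names but cannot say whether each one is a person, a place, or an organization, and it inherits every mistake the POS tagger makes. That dependence on tagging quality is one plausible reason a POS-driven approach struggles on informal text such as lyrics.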

The second Named Entity Algorithm that I tried was the one produced by Stanford. Stanford’s Computational Linguistics Department is arguably the most prestigious in the world. One of the multitude of impressive tools developed out of this esteemed department was their NER tool.

Compared to NLTK’s algorithm, this tool takes much longer to run, but it also produces much more accurate results. Although it is not perfect, it is a massive improvement.

// Part III

Topic Modeling:

One of the most interesting disciplines within NLP is topic modeling. A topic model “is a type of statistical model for discovering the abstract “topics” that occur in a collection of documents. Topic modeling is a frequently used text-mining tool for discovery of hidden semantic structures in a text body.” (source) There are several prominent algorithms for topic modeling. Among the most prominent are Explicit Semantic Analysis and Non-Negative Matrix Factorization. However, the one I chose to use for the purpose of this article was Latent Dirichlet Allocation (LDA). LDA is a generative statistical model developed by David Blei, Andrew Ng, and Michael I. Jordan. Basically, it assumes a fixed number of topics in a given corpus and learns how each topic is represented as a distribution over words. Given this number of topics, LDA also learns the topic distribution of each document in the corpus.

Topic Modeling all Drake Lyrics

One of the first things I wanted to use LDA for was to learn the most prominent topics across all of Drake’s songs. To accomplish this, I put all the songs into a list. Then, using scikit-learn’s CountVectorizer, I created a bag-of-words representation of these songs. Bag of words is a simple way of representing text as a matrix: each row is a document (here, a song), each column is a word in the vocabulary, and each cell counts how often that word appears in that document (link). Then, using scikit-learn’s implementation of LDA, I fit a model with the objective of finding 8 topics within the given text.
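The pipeline described above (list of songs, CountVectorizer, then LDA with 8 topics) might look roughly like the sketch below. The documents are short placeholders rather than real lyrics, and every setting other than the 8 topics (default vectorizer options, `random_state=0`) is an assumption, not necessarily what was used for this article.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Placeholder documents standing in for song lyrics (not real lyrics).
docs = [
    "money city night money friends",
    "love heart night love phone",
    "city team money team win",
    "phone love call heart home",
]

# Bag of words: one row per document, one column per vocabulary word,
# each cell holding how often that word occurs in that document.
vectorizer = CountVectorizer()
bow = vectorizer.fit_transform(docs)

# Fit LDA with 8 topics, the number used in the article.
lda = LatentDirichletAllocation(n_components=8, random_state=0)
doc_topics = lda.fit_transform(bow)  # shape: (n_documents, n_topics)

print(bow.shape)
print(doc_topics.round(2))
```

Each row of `doc_topics` is that document's mixture over the 8 topics and sums to 1, while `lda.components_` holds one row of word weights per topic.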

Visualizing the Topics

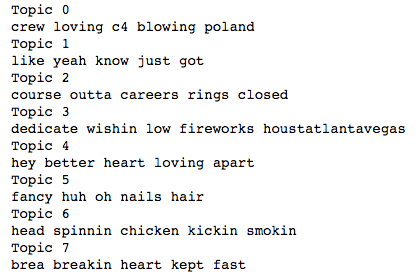

I found two viable paths to visualize the LDA model. The first was through a function I wrote. Basically, it outputs the most prominent words from every topic.
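The original function is not reproduced here, but a helper of this kind could look like the sketch below. The name `top_words_per_topic`, its parameters, and the small weight matrix are all hypothetical. The only assumption about the input is that there is one row of word weights per topic, aligned with a vocabulary list.

```python
def top_words_per_topic(topic_word_weights, vocabulary, n_words=3):
    """Return the n_words highest-weighted words for each topic."""
    summaries = []
    for weights in topic_word_weights:
        # Sort word indices by descending weight and keep the first n_words.
        ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
        summaries.append([vocabulary[i] for i in ranked[:n_words]])
    return summaries


# Made-up weights for two topics over a five-word vocabulary.
vocabulary = ["city", "love", "money", "night", "phone"]
topic_word_weights = [
    [0.9, 0.1, 3.2, 1.5, 0.2],
    [0.3, 2.8, 0.1, 0.7, 1.9],
]

for topic_id, words in enumerate(top_words_per_topic(topic_word_weights, vocabulary)):
    print(f"Topic {topic_id}: {', '.join(words)}")
```

With a fitted scikit-learn model, the same helper should accept `lda.components_` as the weights and `vectorizer.get_feature_names_out()` as the vocabulary.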

The results here are interesting but only provide me a moderate amount of information. Clearly Topic 7 is different than Topic 2 but not enough information is provided to tell me how different they are.

The auxiliary topics do not provide enough information to differentiate one from another. For this reason, I formulated another way to display topics within the text.

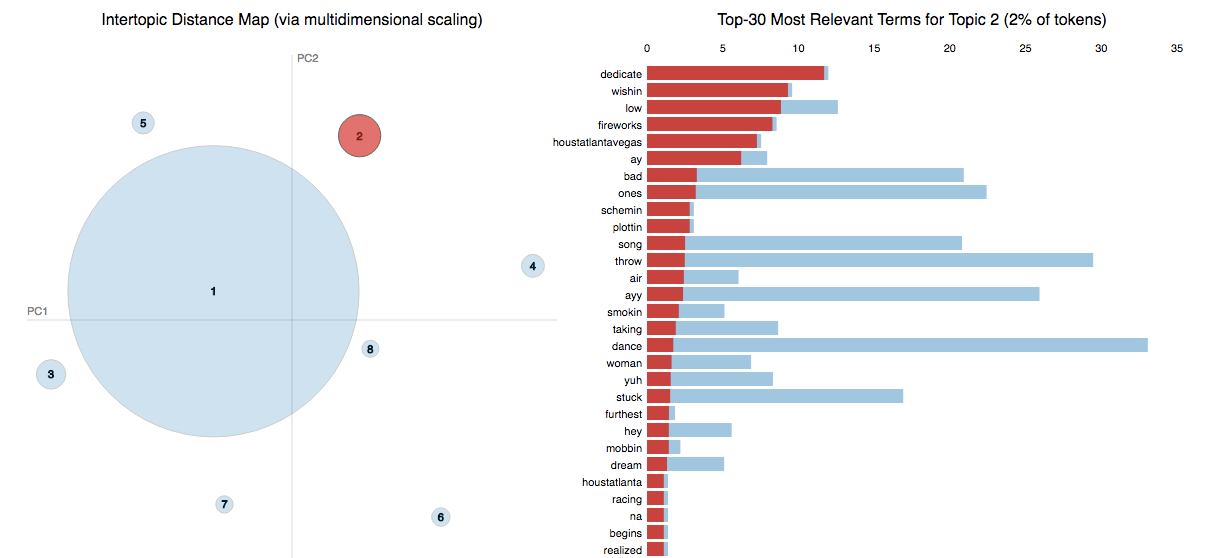

Within Python, there is a wonderful library called pyLDAvis. It is a specialized library that uses D3 to aid in the visualization of the topics created by the LDA model. D3 is arguably the best visualization tool out there. However, it is for JavaScript users. Having this plugin is very useful for someone who does not know JavaScript very well. To visualize the data, the library uses dimensionality reduction. Dimensionality reduction compresses a data set with many variables into a smaller number of features. These techniques are extremely useful for visualizing data because they can compress it down to two features, which can then be plotted on a plane. For my particular visualization, I decided it was best to use t-SNE (t-Distributed Stochastic Neighbor Embedding) for dimensionality reduction.
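As a rough illustration of the dimensionality reduction step, the sketch below projects a topic-word matrix down to two coordinates per topic with scikit-learn's TSNE. This is not pyLDAvis's internal code. The matrix is randomly generated rather than taken from a fitted model, and the `perplexity`, `init`, and `random_state` values are assumptions chosen so that the example runs on only 8 rows.

```python
import numpy as np
from sklearn.manifold import TSNE

rng = np.random.default_rng(0)

# Hypothetical topic-word matrix: 8 topics over a 50-word vocabulary,
# each row a randomly drawn probability distribution.
topic_word = rng.dirichlet(np.ones(50), size=8)

# perplexity must be smaller than the number of rows (8 here).
tsne = TSNE(n_components=2, perplexity=3, init="random", random_state=0)
coords = tsne.fit_transform(topic_word)  # one (x, y) point per topic

print(coords.shape)
```

Each topic ends up as a single point on a plane, which is what makes it possible to draw topics as bubbles and judge by eye how far apart they sit.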

It seems from the fitting of my model, the majority of Drake’s lyrics can be classified into one massive topic that occupies the majority of the graph. The rest of the topics are diminutive by comparison.

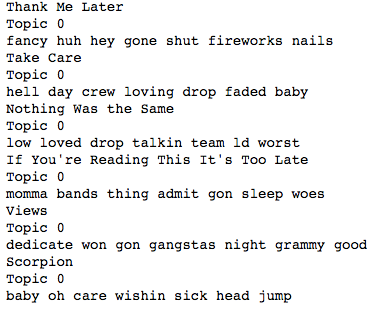

What did the topics look like for all of Drake’s major releases?

In order to do this, I followed the same steps as before, except ran the LDA algorithm to find exactly one topic for each album. I then used a function I defined earlier to display the most prominent words for all his major works.

// Conclusion:

Drake is arguably the most popular artist in the world. By the time he decides to retire, he’ll be one of the most accomplished rappers ever. Whenever he releases a new song or album, a palpable amount of buzz is certain to follow. His work almost always ends up at the top of the popularity charts, and his lyrics instantly become staples of Instagram and Facebook captions for weeks. His songs are memorable, and his lyrics are a major reason.

As my first NLP project, I would deem this a success. Through the work done here, I gained a more concrete understanding of Drake’s lyrics. Although there is certainly a bevy of other NLP tasks I could play around with in future work, Topic Modeling and Named Entity Recognition are a good starting point.

Thanks for reading this! If you have any suggestions for future work or critiques of my work, feel free to comment or reach out to me.

Something to note: I removed the expletives from the song lyrics to make this article more family-friendly.

Featured Image via Trace TV